RadianceVR

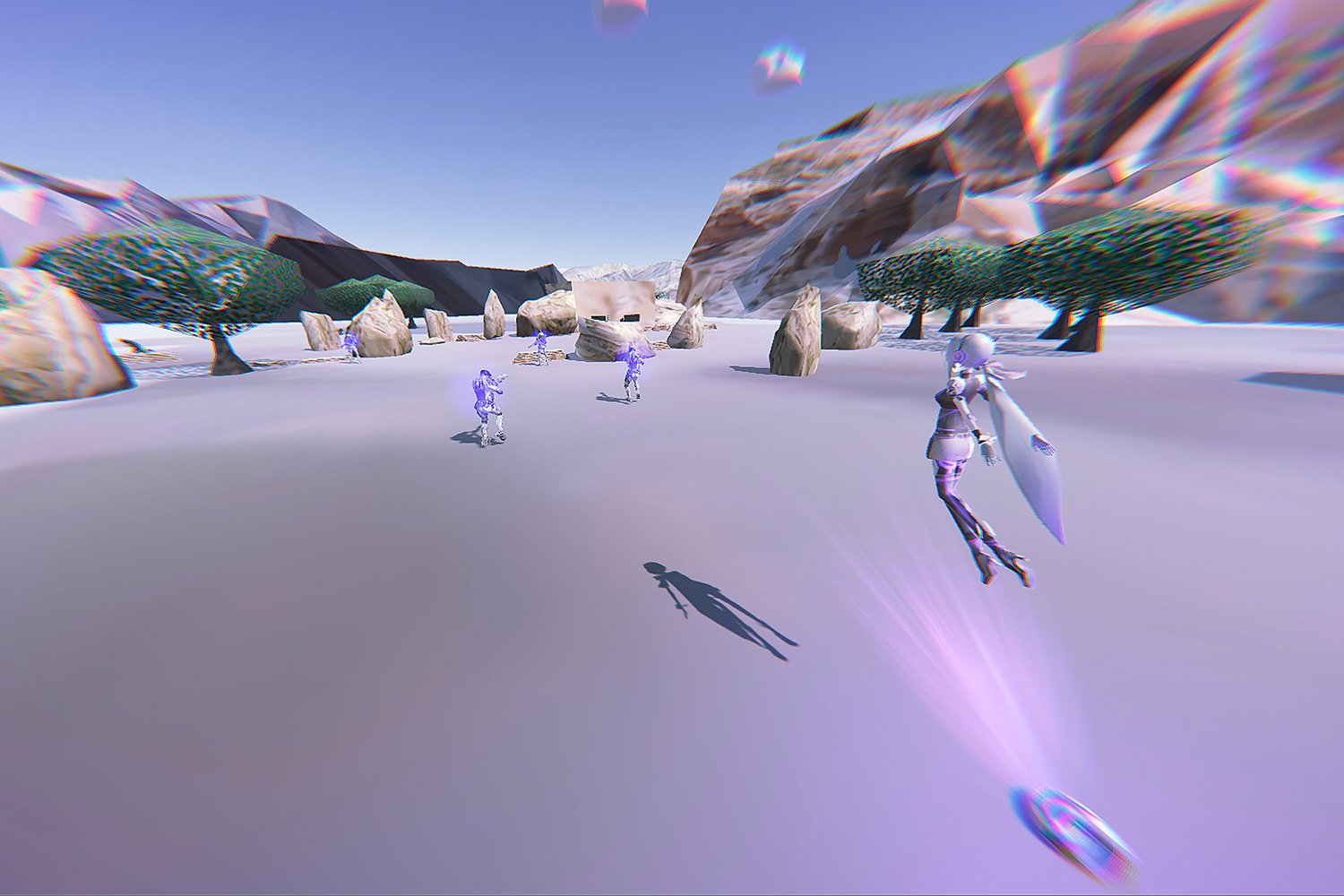

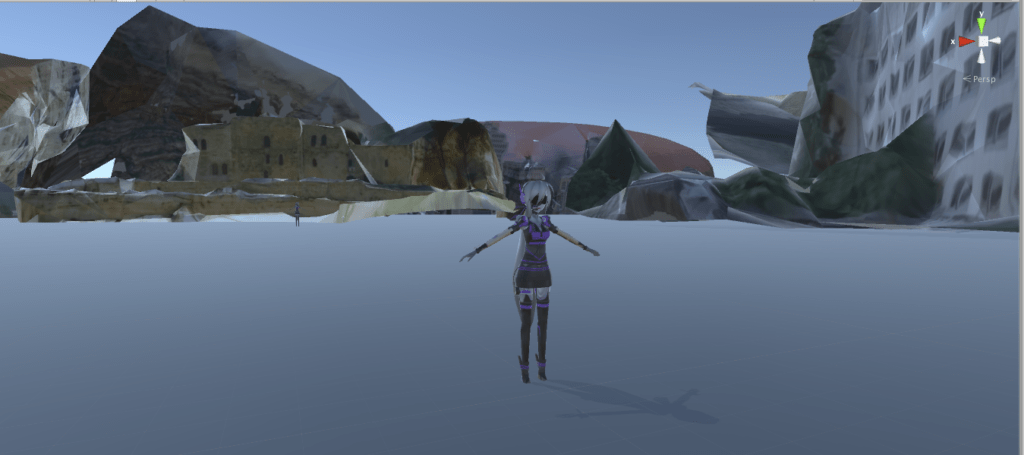

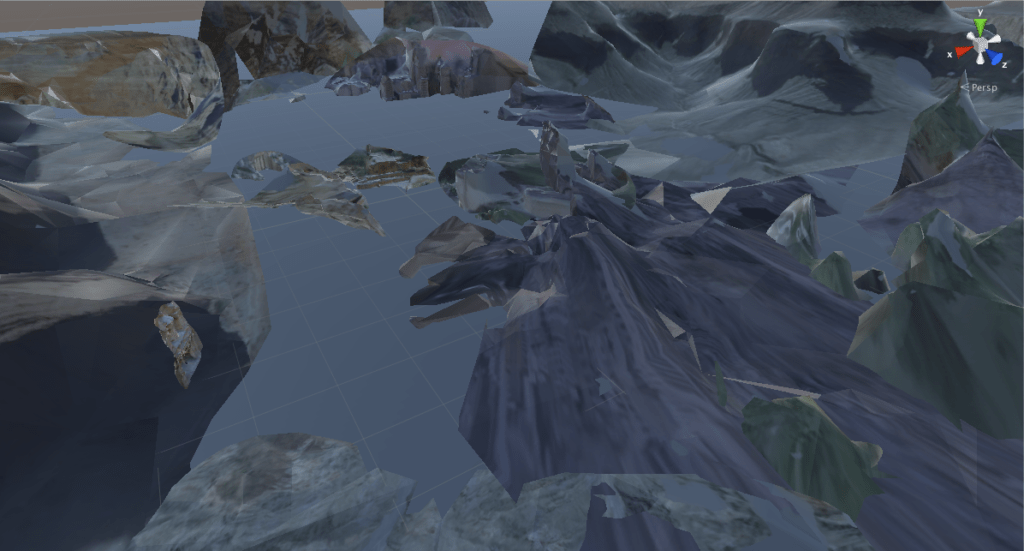

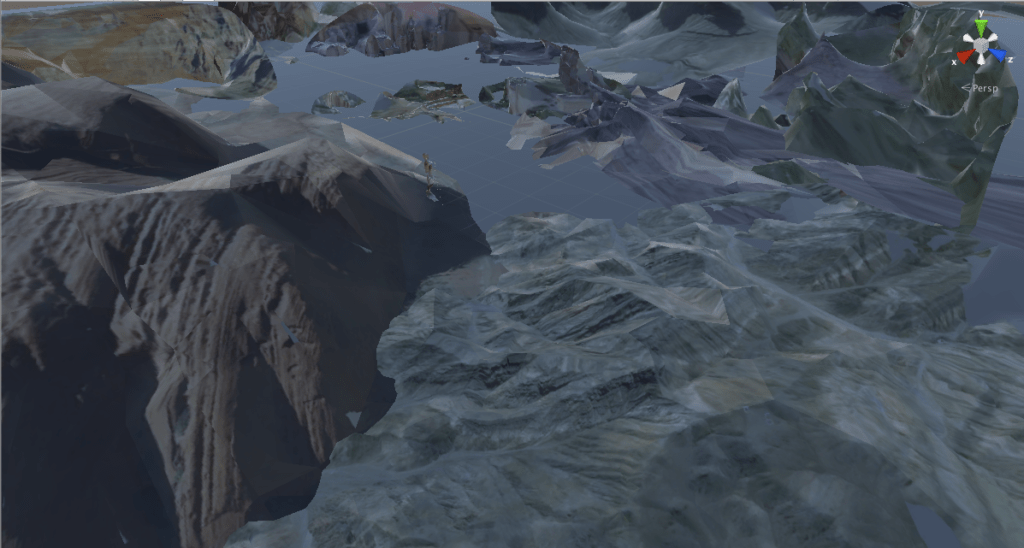

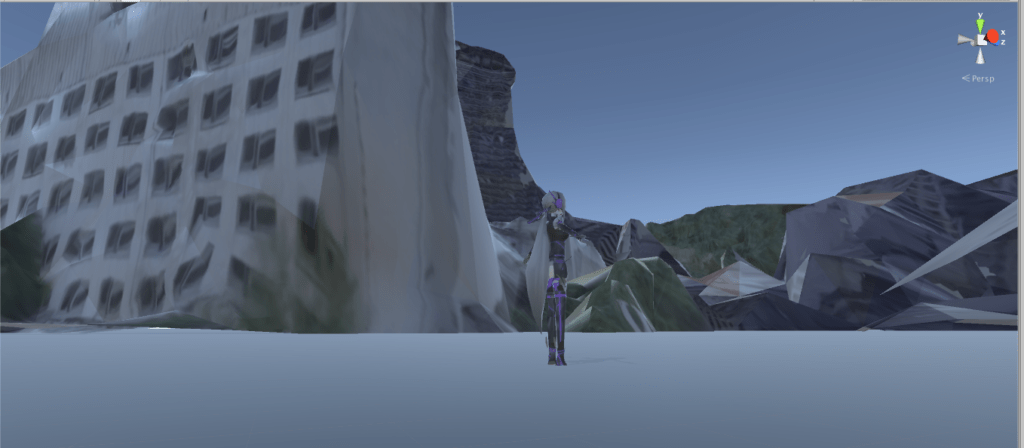

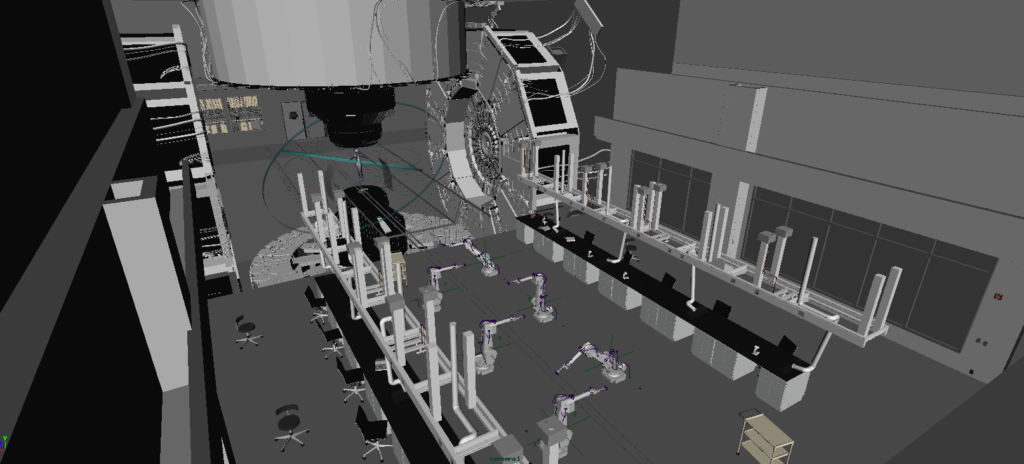

Project H.E.A.R.T. (2017) has joined an interesting collection of VR works on the website radiancevr.co.

If you're looking for great examples of VR art, I'd highly recommend browsing the works on this website!

The platform was founded by curators Philip Hausmeier and Tina Sauerlaender:

“Radiance is a research platform and database for VR art. Its mission is to present artists working with VR from all over the world to create visibility and accessibility for VR art and for faster adoption of virtual technologies. The platform works closely with artists, institutions and independent curators to select the highest quality of virtual art for public institutional exhibitions.”