Presence

Presence (2020)

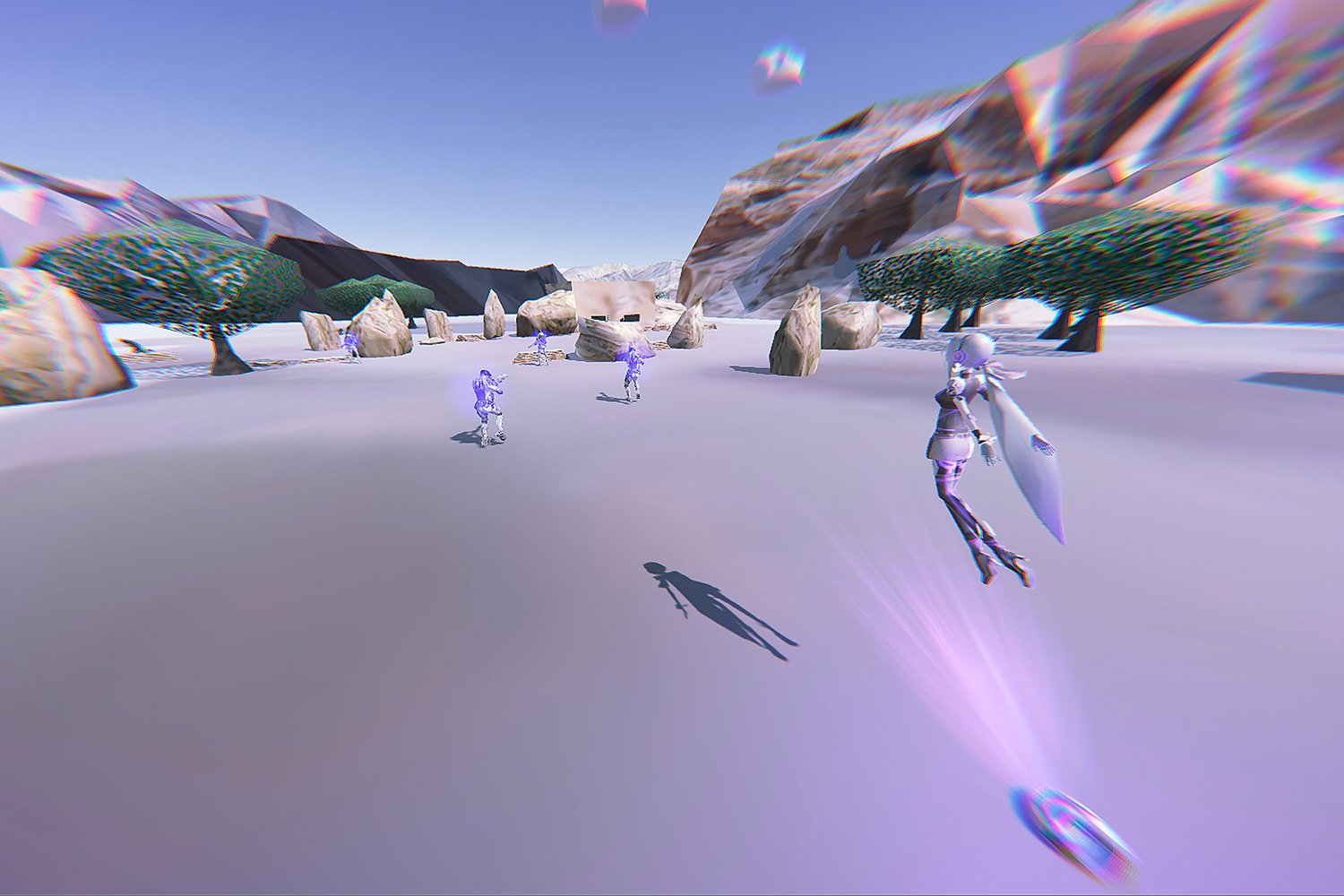

Screen capture from the online performance at Network Music Festival, 2020.


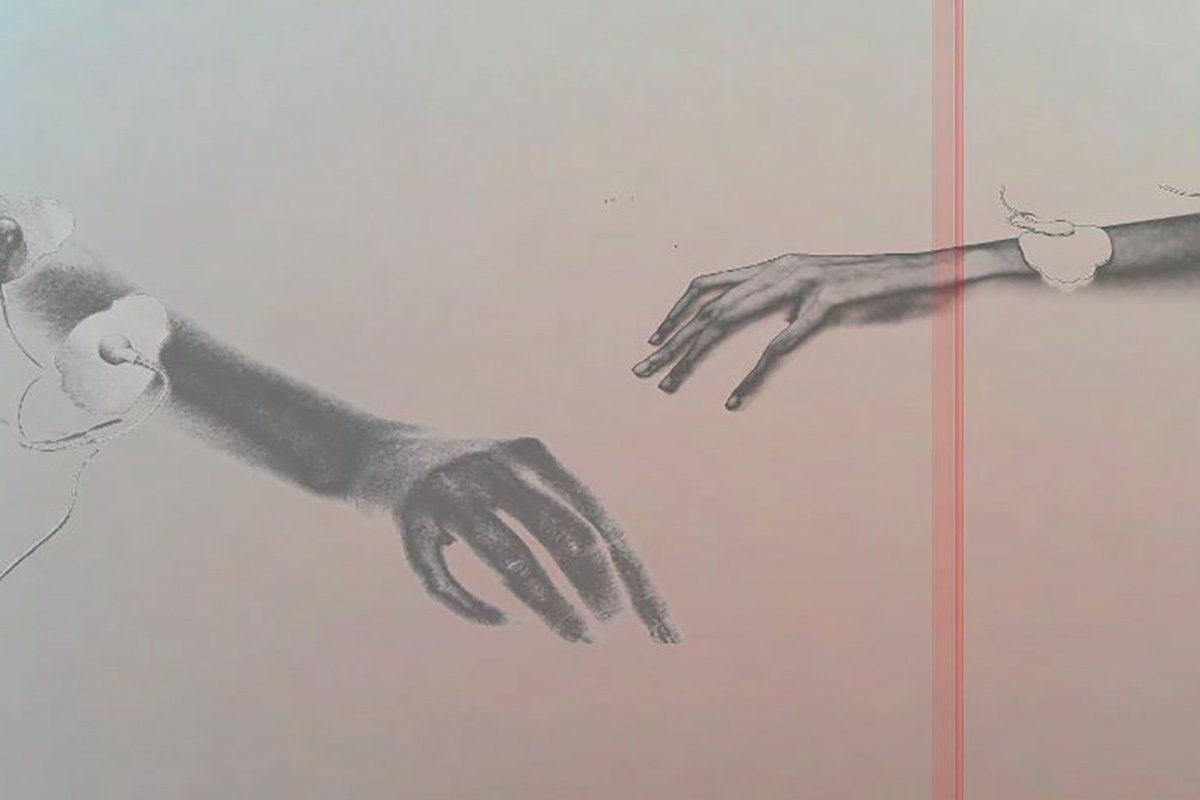

In Presence, artists Erin Gee and Jen Kutler reconfigure voice and touch across the internet through a haptic/physical feedback loop, using affective and physical telematics to structure an immersive electronic soundscape through physiological response.

(March 2020) I was quarantining intensely during the coronavirus pandemic when Jen Kutler reached out to ask if I would like to collaborate on a new work that simulates presence and attention over the network. We had never met in person, but we started talking online every day. We eventually built a musical structure that incorporates live webcam and endoscopic camera footage, biosensor data, sounds rearranged by that biosensor data, ASMR roleplay, and touch stimulation devices that deliver small shocks to each artist. We first developed this work during a month-long intensive online residency at SAW Video, in conversation with many amazing artists, curators and other creative people.

Presence is a telematic music composition for two bodies, created in the spring of 2020 at the height of confinement and social distancing during the COVID-19 pandemic in Montreal and New York State. Both artists have performed the work for online audiences from their homes (Montreal and New York). Gee and Kutler are each attached to biosensors that collect the unconscious behaviours of their autonomic nervous systems, as well as to touch stimulation units that make this data tactile for each artist through transcutaneous nerve stimulation.

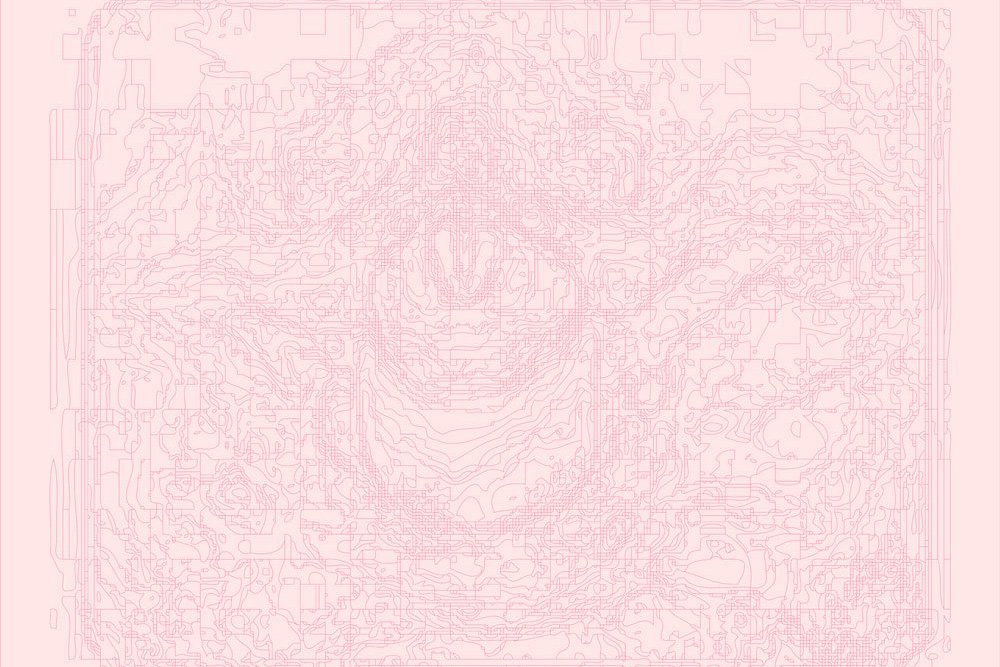

Audiences are invited to listen attentively to this networked session for physicalized affect, expressed through the sonification of each artist’s biodata, which also slowly triggers an ASMR roleplay that is actively reconfigured by the bodily reactions of each artist. Music and transcutaneous electronic nerve stimulation are triggered by listening bodies, and these bodies are in turn triggered by the sounds and electric pulses. Every element in the system is unconscious, triggering and being triggered by the others across networked delays, yet present. Through this musical intervention, the artists invite listeners to imagine the experience and implicate their own bodies in the networked transmission, and to witness the artists touching the borders of themselves and their physical spaces while in isolation.

Credits

WebSocket for Pure Data (wspd), created for Presence by Michael Palumbo. Available on GitHub.

Biodata circuitry and library created by Erin Gee. Available on GitHub.

Electronic touch stimulation device for MIDI created by Jen Kutler. Available on GitHub.

Performance built by Erin Gee and Jen Kutler with a combination of Pure Data (data routing), Processing (biodata-generated visuals), Ableton Live (sound) and OBS (live telematics).

Presence was created in part with support from SAW Video, an artist-run centre in Canada.

Exhibition/Performance history

SAW Video “Stay at Home” Residency, March–April 2020

Network Music Festival, July 17, 2020

Fonderie Darling, as part of Allegorical Circuits for Human Software, curated by Laurie Cotton Pigeon, August 13, 2020

Video

Presence (2020)

Performance by Erin Gee and Jen Kutler at Network Music Festival.