La Lumière MBASMR

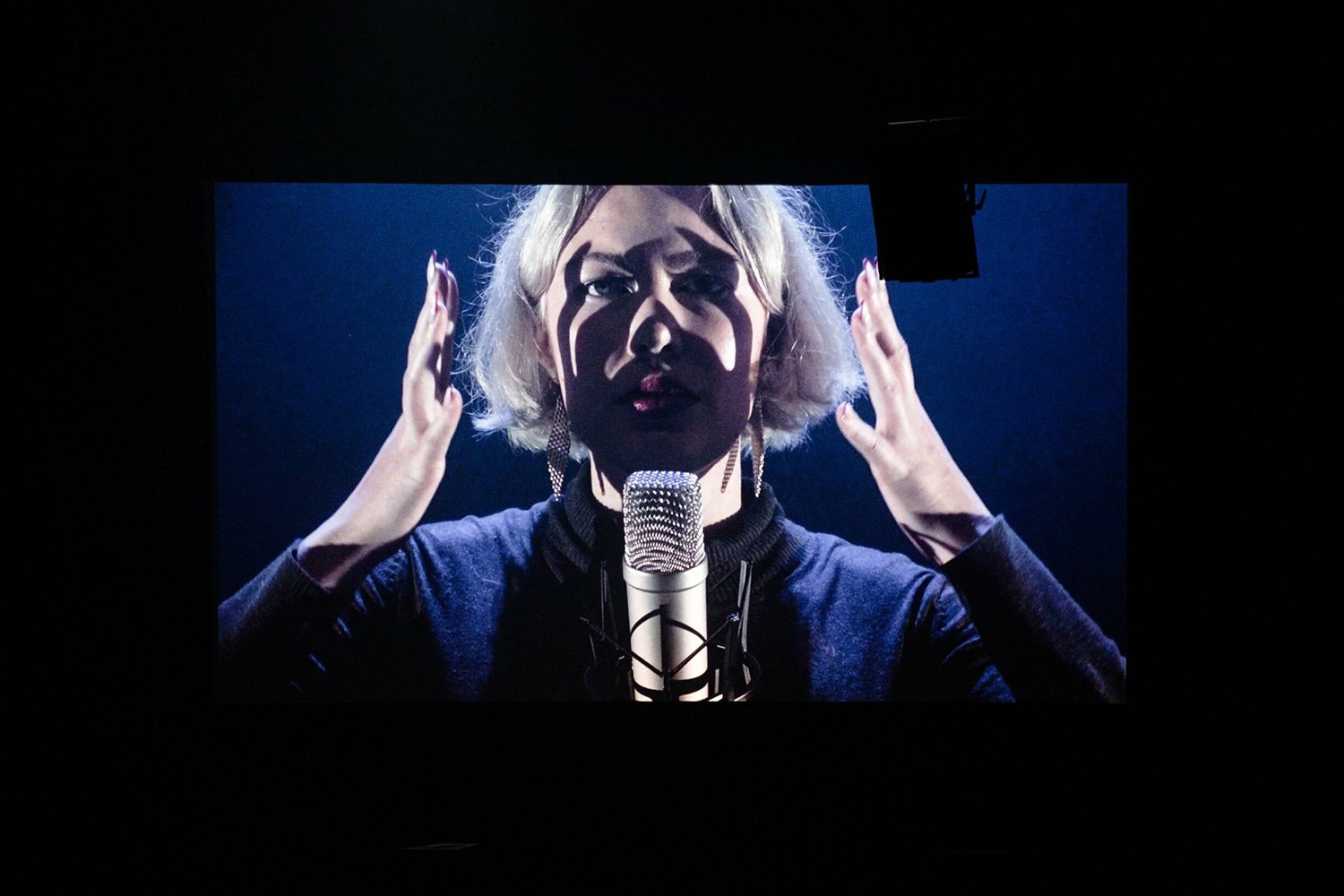

Performance

2022

In 2022, Canadian composer and media artist Erin Gee was commissioned by the Broodthaers Society of America (NYC) to perform a remote concert inspired by *La Lumière Manifeste*, a work by the 20th-century Belgian conceptual artist Marcel Broodthaers.

The performance was part of programming celebrating a new vinyl album of Broodthaers reading some of his late poetry, in recordings recently unearthed and remastered by Raf Wollaert (Doctoral Fellow at the University of Antwerp). Gee uses the original French versions of Broodthaers’ poems as the basis for her online multimedia songbook, drawing on English subtitles, the tools and gestures of ASMR (autonomous sensory meridian response), and the inherent immersion and drift of the internet.