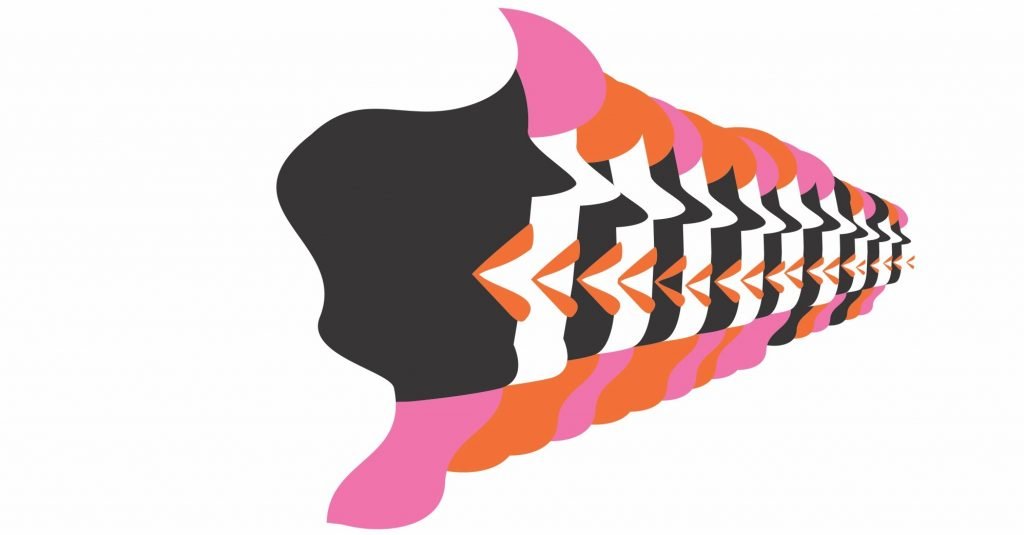

Review: Akimblog, Canada

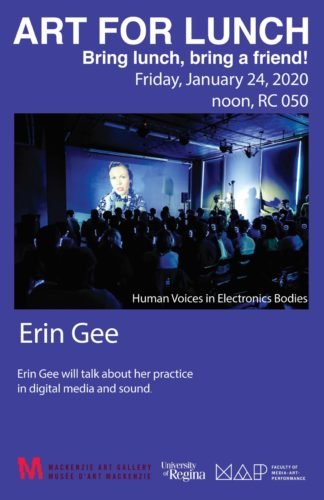

The first review of my solo exhibition *To the Sooe* at the MacKenzie Art Gallery is here! *To the Sooe* is on view in Regina, Canada, until April 19th.

“Gee delivers the output in ASMR style through role play and a sound performance that leave you both mesmerized and tingling to your core. The sterile white walls and scientific jargon of the exhibition texts should not deter you from this immersive and sensory experience. Gee’s complex communication configurations require your time, patience and an open mind.” -Alexa Heenan, Akimblog