ASAP Journal

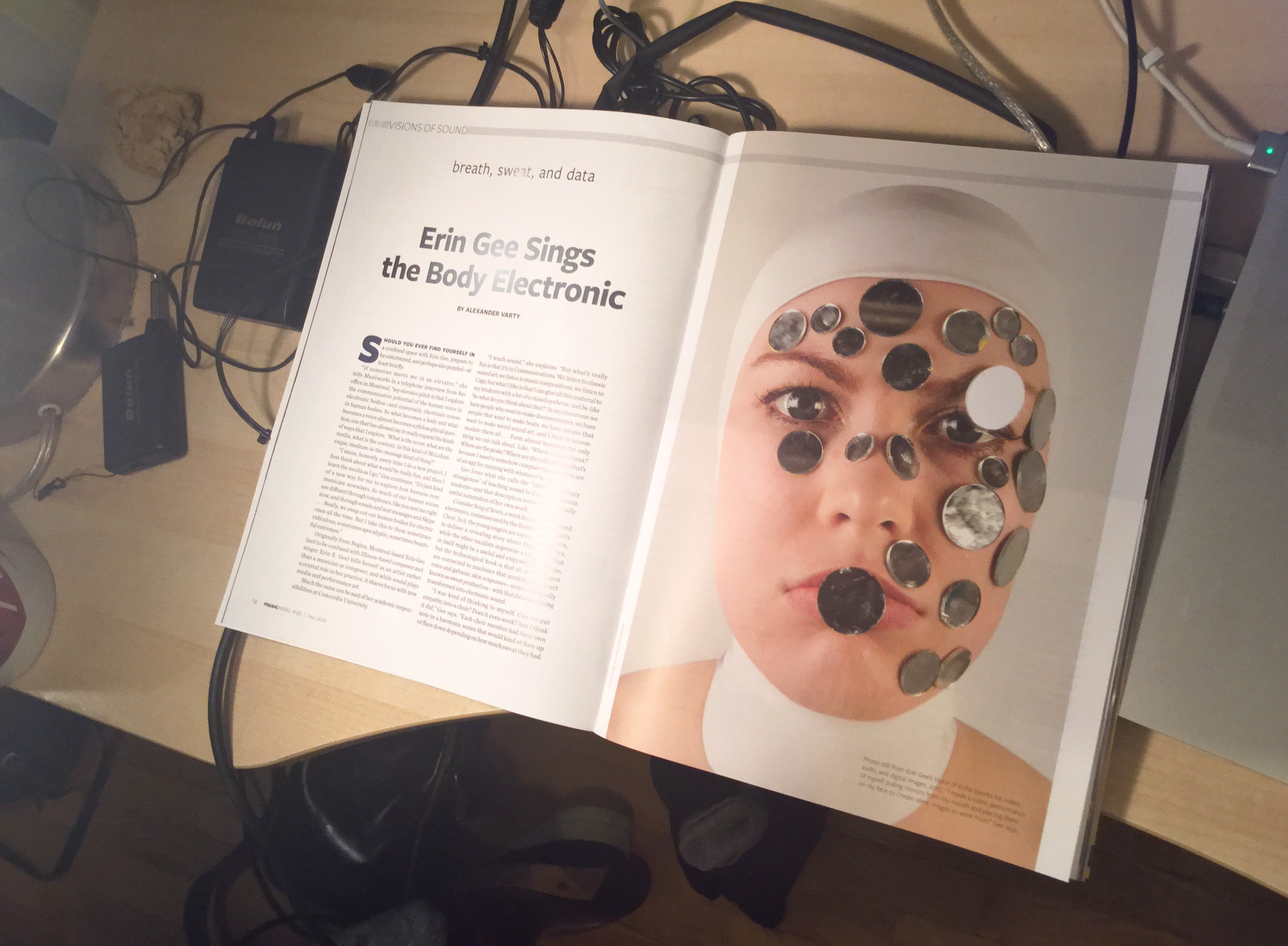

Happy to announce that my short article on machine learning, ASMR, and sound, “Automation as Echo,” written with Sofian Audry, is now published in ASAP/Journal 4.2, in a collection of articles assembled by Jennifer Rhee covering automation from diverse/creative/critical perspectives.

From the article:

“The echo is a metaphor that goes beyond sound, speaking to the physical and temporal gaps in human-computer interaction that open up a space of aesthetic consumption problematized by the impossibility of comprehending machine perspectives on human terms. The echo unfolds in time, but most importantly it unfolds in space: sound travels as a physical interaction between a subject and an object that seemingly “speaks back.”

The mythological nymph Echo “speaks” or “performs” her subjectivity through reflection or imitation of the voice of human Narcissus. Her (incomplete, sometimes humorous, sometimes uncannily resemblant) nonhuman voice is dependent on the human subject, who is also the progenitor of her speech. The relationship between these two mythological entities creates an apt metaphor for machine learning: its processes are not of the human, yet its “neural” functions are crafted in imitation of and in response to human thought. As machine subjectivity is crafted from human subjectivity, we cannot grasp its machined voice, nor perceive its subjective position, through analysis of its various textual, sonic, visual, and robotic outputs alone. Rather, the “voice” of machine learning is fleeting, heard through the spaces, the gaps, the movements between the machine and the human, the vibrational color of nonhuman noise.”

ABOUT ASAP JOURNAL

ASAP/Journal is a peer-reviewed scholarly journal published by Johns Hopkins University Press that explores new developments in post-1960s visual, media, literary, and performance arts. The scholarly publication of ASAP: The Association for the Study of the Arts of the Present, ASAP/Journal promotes intellectual exchange between artists and critics across the arts and humanities. The journal publishes methodologically cutting-edge, conceptually adventurous, and historically nuanced research about the arts of the present.